Showing posts with label Docker.

Sunday, 10 November 2019

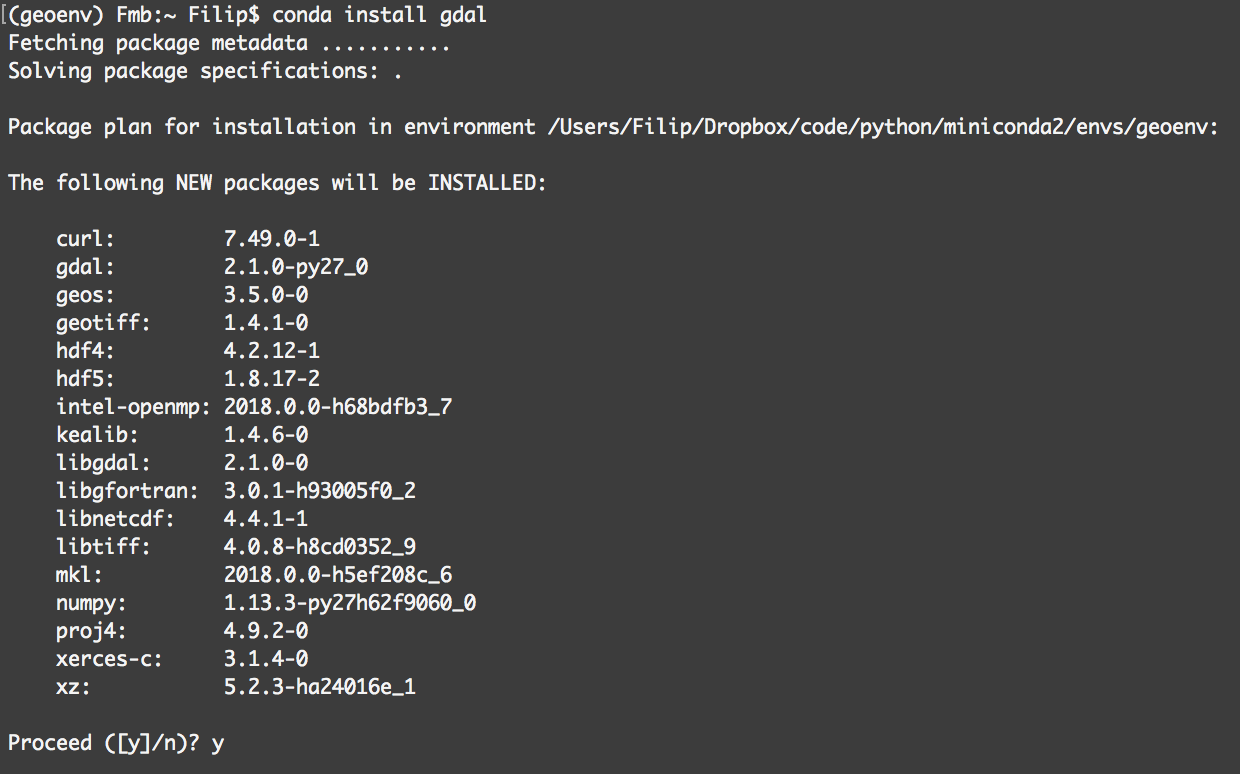

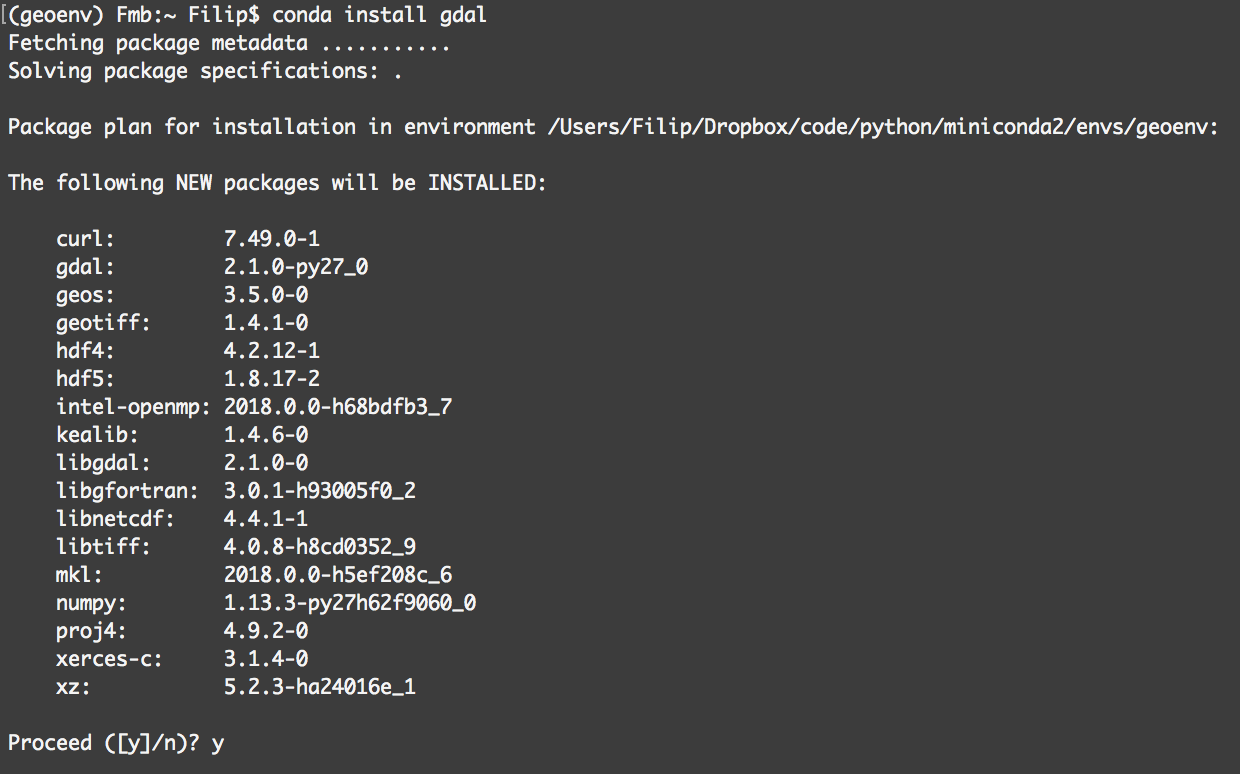

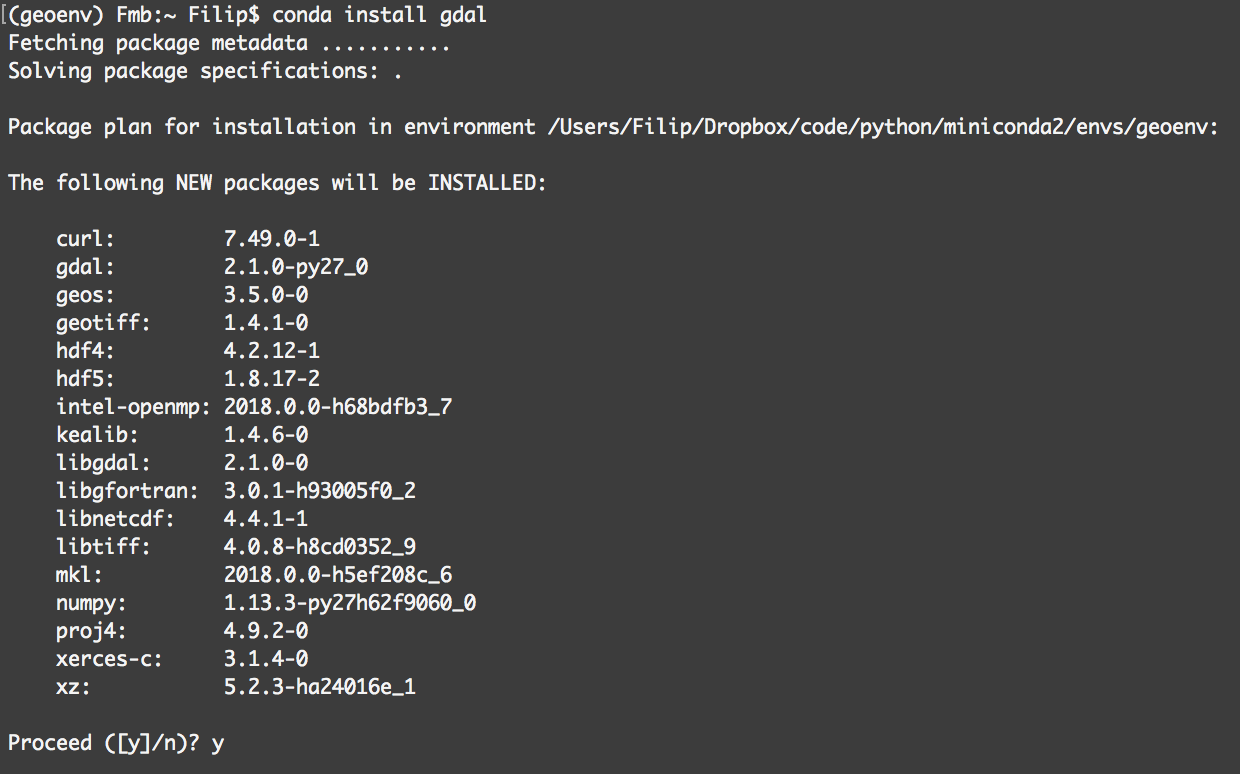

10-minute guide to Bioconda

Bioinformatics is complicated, what with its arcane command-line interfaces, complex workflows, and massive datasets. For new bioinformaticians, even installing the software can be a problem.

But the good news is that the bioinformatics community already has a solution to this problem: BioConda + BioContainers.
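As a rough illustration of what that combination looks like in practice, here is a small Python sketch that builds a `conda create` command pulling packages from the Bioconda channel, plus the name of the matching BioContainers image. It assumes the channel order the Bioconda documentation currently recommends (conda-forge ahead of bioconda). The environment name, package, and image tag are placeholders; real BioContainers tags combine the tool version with a build string, so look up the exact tag on quay.io before using one.

```python
from typing import List, Sequence

# Channel order recommended by the Bioconda docs at the time of writing:
# conda-forge first (highest priority), then bioconda.
BIOCONDA_CHANNELS = ("conda-forge", "bioconda")


def conda_create_command(env_name: str, packages: Sequence[str]) -> List[str]:
    """Build a `conda create` command that installs packages from Bioconda."""
    if not packages:
        raise ValueError("at least one package is required")
    cmd = ["conda", "create", "--yes", "--name", env_name]
    for channel in BIOCONDA_CHANNELS:
        # Channels listed first on the command line take priority.
        cmd += ["--channel", channel]
    cmd += list(packages)
    return cmd


def biocontainer_image(tool: str, tag: str) -> str:
    """Name of the BioContainers image built from the same Bioconda package."""
    return f"quay.io/biocontainers/{tool}:{tag}"
```

For example, `subprocess.run(conda_create_command("qc", ["samtools"]), check=True)` would create an environment named `qc` containing samtools, provided `conda` is on your PATH.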

Labels:

BioConda,

BioContainers,

BioDocker,

Bioinformatics,

Conda,

Containers,

Docker,

Large-Scale data analysis

Saturday, 29 August 2015

DIA-Umpire Pipeline Using BioDocker Containers

Monday, 24 August 2015

Moving Bioinformatics to the Cloud

New technologies are constantly being developed and released to the public. For researchers who work with molecular biology, the ones that get our attention usually come from new laboratory methodologies or instruments. In this post, we are going to talk about something different that is catching the attention of researchers in molecular biology, and more specifically in bioinformatics: a technological innovation from the computational field that can have a great impact on how we do biological analysis with bioinformatics software.

A few years ago, a cloud startup called dotCloud developed a piece of software called Docker for internal use only. It was so successful that, just two years after Docker was released to the public, the company (now renamed Docker) was estimated to be worth $1 billion.

What Is Docker and Why Does It Matter?

Docker can be employed in many different environments. Basically, it provides the user with isolated, containerized software that runs separately from the rest of the host operating system. This is very similar to what a virtual machine does; the difference is that there is no guest operating system. Containers share the host's kernel and apply abstraction layers to isolate the software running inside them. In the end, you have an isolated environment with custom software inside that can be shared with others.
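One way to see the "no guest operating system" point for yourself is to compare the kernel release reported by the host with the one reported inside a container. This Python sketch assumes Docker is installed and that the small `alpine:3.10` image can be pulled from Docker Hub. On a typical Linux host the two values should be identical, because the container runs on the host's kernel; on Docker Desktop for macOS or Windows you will instead see the kernel of the lightweight Linux VM that Docker runs in.

```python
import platform
import shutil
import subprocess
from typing import List, Optional, Tuple


def container_kernel_command(image: str = "alpine:3.10") -> List[str]:
    # --rm deletes the container as soon as `uname -r` exits
    return ["docker", "run", "--rm", image, "uname", "-r"]


def compare_kernels(image: str = "alpine:3.10") -> Tuple[str, Optional[str]]:
    """Return (host kernel release, container kernel release or None)."""
    host = platform.release()
    if shutil.which("docker") is None:
        return host, None  # Docker is not installed, nothing to compare
    result = subprocess.run(
        container_kernel_command(image),
        capture_output=True,
        text=True,
        check=True,  # raise if the daemon is unreachable or the pull fails
    )
    return host, result.stdout.strip()
```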

What Does This Have to Do with Bioinformatics?

Imagine that you are a senior researcher, or a newly admitted student, trying to learn how to run some analysis. You are a lab specialist, but computers are not your thing. Now imagine that the software you are trying to run needs a Linux operating system with gcc version 4.9.3 and some libraries such as GD. Sounds bad, right? That's where Docker comes in. Docker allows developers to ship software inside a container, that is, a custom environment with all the tools and configuration needed to run a specific program; all you have to do is download the container and execute the program inside it. Running a Docker container is just as simple as running a program on the command line.
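To make the old-compiler scenario concrete: instead of installing a legacy gcc on your machine, you can run one that is already packaged in an image. This minimal Python sketch assumes the official `gcc:4.9` image is still available on Docker Hub (it is an old tag and may eventually be removed); `docker run` pulls the image automatically the first time it is used.

```python
import shutil
import subprocess
from typing import List


def run_in_container_command(image: str, *args: str) -> List[str]:
    """Build a `docker run` call that executes `args` inside `image`."""
    # --rm removes the container once the program exits; the image stays cached
    return ["docker", "run", "--rm", image, *args]


def gcc_version_in_container(image: str = "gcc:4.9") -> str:
    """Return the first line of `gcc --version` as reported inside the container."""
    if shutil.which("docker") is None:
        raise RuntimeError("docker executable not found on PATH")
    result = subprocess.run(
        run_in_container_command(image, "gcc", "--version"),
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout.splitlines()[0]
```

The compiler never touches the host system: when the command finishes, the container is removed and only the downloaded image remains.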

Benefits for Bioinformatics

For a bioinformatician, Docker brings several other benefits. One topic getting a lot of attention today is reproducible research in bioinformatics. Computers with different configurations, libraries, and software versions can produce different results from the same analysis. If we could turn the computing environment from a variable into a constant, that problem would be greatly reduced.
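In Docker terms, making the environment a constant mostly means always referring to the exact same image. The helper below is a small Python sketch (not part of Docker itself) that classifies an image reference: a `@sha256:` digest identifies immutable image content, a version tag is readable but can be re-pushed by the image maintainer, and a missing tag or `:latest` may resolve to a different image tomorrow.

```python
import re

# A content digest such as @sha256:<64 hex characters> pins the exact image bytes.
_DIGEST = re.compile(r"@sha256:[0-9a-f]{64}$")


def pinning_level(image: str) -> str:
    """Classify an image reference as 'digest', 'tag' or 'floating'."""
    if _DIGEST.search(image):
        return "digest"  # immutable: always the same image content
    # Only look at the last path segment so a registry port (localhost:5000) is ignored.
    name = image.rsplit("/", 1)[-1]
    if ":" in name:
        tag = name.split(":", 1)[1]
        return "floating" if tag == "latest" else "tag"
    return "floating"  # no tag at all means Docker uses :latest
```

In a shared pipeline, you might refuse to run anything classified as `floating` and record the digest of every image used alongside the results.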

The BioDocker Project

In 2014, a new project called BioDocker was founded. Recently, the project adopted a community-driven model: the main idea is to gather feedback from the community and take advantage of each member's expertise. The goal is to provide containerized bioinformatics tools to the general public. For bioinformatics developers, the project also provides specifications, settings, and guidelines on how to build your own BioDocker containers. By defining guidelines like these, we hope that the use of Docker becomes more common, helping people deal more easily with different software and reducing the reproducibility problem.
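To give an idea of what such guidelines can look like, this Python sketch renders a minimal Dockerfile that records metadata about the packaged tool as `LABEL` instructions, and reports which expected labels are missing. The label keys are modeled on the metadata labels used in BioContainers Dockerfiles, but the exact required set here is an assumption, so check the project's current specification; the base image and tool name in the usage note are placeholders.

```python
from typing import Dict, List

# Label keys modeled on BioContainers-style metadata; an assumption, not an official list.
EXPECTED_LABELS = ("software", "software.version", "about.summary", "about.home", "about.license")


def missing_labels(labels: Dict[str, str]) -> List[str]:
    """Return the expected metadata keys that `labels` does not define."""
    return [key for key in EXPECTED_LABELS if key not in labels]


def render_dockerfile(base_image: str, labels: Dict[str, str], install_steps: List[str]) -> str:
    """Render a minimal Dockerfile: base image, metadata labels, then install steps."""
    lines = [f"FROM {base_image}"]
    for key, value in labels.items():
        # Quote values so spaces are allowed; escape embedded double quotes.
        escaped = value.replace('"', '\\"')
        lines.append(f'LABEL {key}="{escaped}"')
    for step in install_steps:
        lines.append(f"RUN {step}")
    return "\n".join(lines) + "\n"
```

For instance, `render_dockerfile("ubuntu:16.04", {"software": "example-tool"}, ["apt-get update"])` produces a three-line Dockerfile, and `missing_labels` then tells you which metadata you still need to fill in before sharing it.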

Wrapping up

Docker is a new technology that is gaining a lot of ground nowadays, and it is slowly making its way into the bioinformatics field as well. It is definitely worth taking some time to learn how to work with it.

Sunday, 10 May 2015

A Trans-Proteomic Pipeline (TPP) Docker container

By Felipe Leprevost & Yasset Perez-Riverol

In my first post on this blog, I will show you how to use a Docker container with the Trans-Proteomic Pipeline software installed.

Docker is a great new technology that allows us to create GNU/Linux containers with specific software inside. All kinds of software can be "containerized", including programs that rely on graphical user interfaces.

The whole idea behind a Docker container is to have software that is isolated from the host OS but can still interact with the outside world. GNU/Linux containers such as Docker are also very useful in the scientific world, where bioinformatics applications are used every day.

Using Docker with bioinformatics software helps solve some of the issues we face, reproducibility for example. We wrote about this last year [1]. You can also find more containers with bioinformatics applications on the BioDocker webpage.

Here I am going to describe how to install and use one of the most powerful software packages for proteomics data analysis, the Trans-Proteomic Pipeline (TPP).

Unfortunately, if you are a GNU/Linux user (like me) and your job involves MS/MS data analysis (also like me), you will probably have a hard time trying to install TPP. Almost all the tutorials available on the Web are aimed at Windows users, so novice bioinformaticians, or anyone not very comfortable with GNU/Linux, can struggle.

With a TPP Docker container, you can just download it and use it on the command line. The container itself behaves like an executable, so imagine the possibilities.
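Here is one way to make the container feel like an executable: a thin Python wrapper that mounts a host directory into the container and runs a TPP command there, so input and output files stay on the host. The image name `example/tpp` is hypothetical (substitute the TPP image you actually pulled, ideally pinned to a specific tag), and the sketch assumes the TPP tools, such as `xinteract`, are on the image's PATH.

```python
import os
import subprocess
from typing import List, Optional

# Hypothetical image name: replace it with the TPP image you actually pulled.
TPP_IMAGE = "example/tpp"


def tpp_command(tool: str, args: List[str], workdir: Optional[str] = None,
                image: str = TPP_IMAGE) -> List[str]:
    """Build a `docker run` call that runs one TPP tool on files in `workdir`."""
    host_dir = os.path.abspath(workdir or os.getcwd())
    return [
        "docker", "run", "--rm",
        "-v", f"{host_dir}:/data",  # share the host directory with the container
        "-w", "/data",              # start the tool inside that shared directory
        image, tool, *args,
    ]


def run_tpp(tool: str, args: List[str], workdir: Optional[str] = None,
            image: str = TPP_IMAGE) -> int:
    """Run the tool like a local executable and return its exit status."""
    return subprocess.run(tpp_command(tool, args, workdir, image)).returncode
```

Assuming your search results are in the current directory, something like `run_tpp("xinteract", ["-Ninteract.pep.xml", "sample.pep.xml"])` would then behave much like calling a locally installed `xinteract`.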

Let's begin by preparing your environment for Docker. The first thing you have to do is install some libraries that are essential for the Docker daemon to run properly. If you are running Ubuntu, you can skip this step. If you are on a different OS, such as Linux Mint, you need to follow these steps.
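Whichever distribution you are on, it is worth confirming afterwards that Docker actually works. This Python function is a small sketch that distinguishes three cases: the `docker` client is not installed; the client is installed but cannot reach the daemon (which is also what you get when the daemon is not running or your user lacks permission to use it); or everything is ready. It relies on `docker info` exiting with a non-zero status when it cannot talk to the daemon.

```python
import shutil
import subprocess


def docker_status(timeout: float = 30.0) -> str:
    """Return 'missing', 'daemon-unreachable' or 'ready'."""
    if shutil.which("docker") is None:
        return "missing"  # the docker client is not on PATH
    try:
        # `docker info` must contact the daemon and exits non-zero when it cannot
        result = subprocess.run(
            ["docker", "info"],
            stdout=subprocess.DEVNULL,
            stderr=subprocess.DEVNULL,
            timeout=timeout,
        )
    except subprocess.TimeoutExpired:
        return "daemon-unreachable"
    return "ready" if result.returncode == 0 else "daemon-unreachable"
```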

Labels:

Bioinformatics,

Docker,

Linux,

proteomics,

TPP
